Automatic recognition of ancient handwritten equations

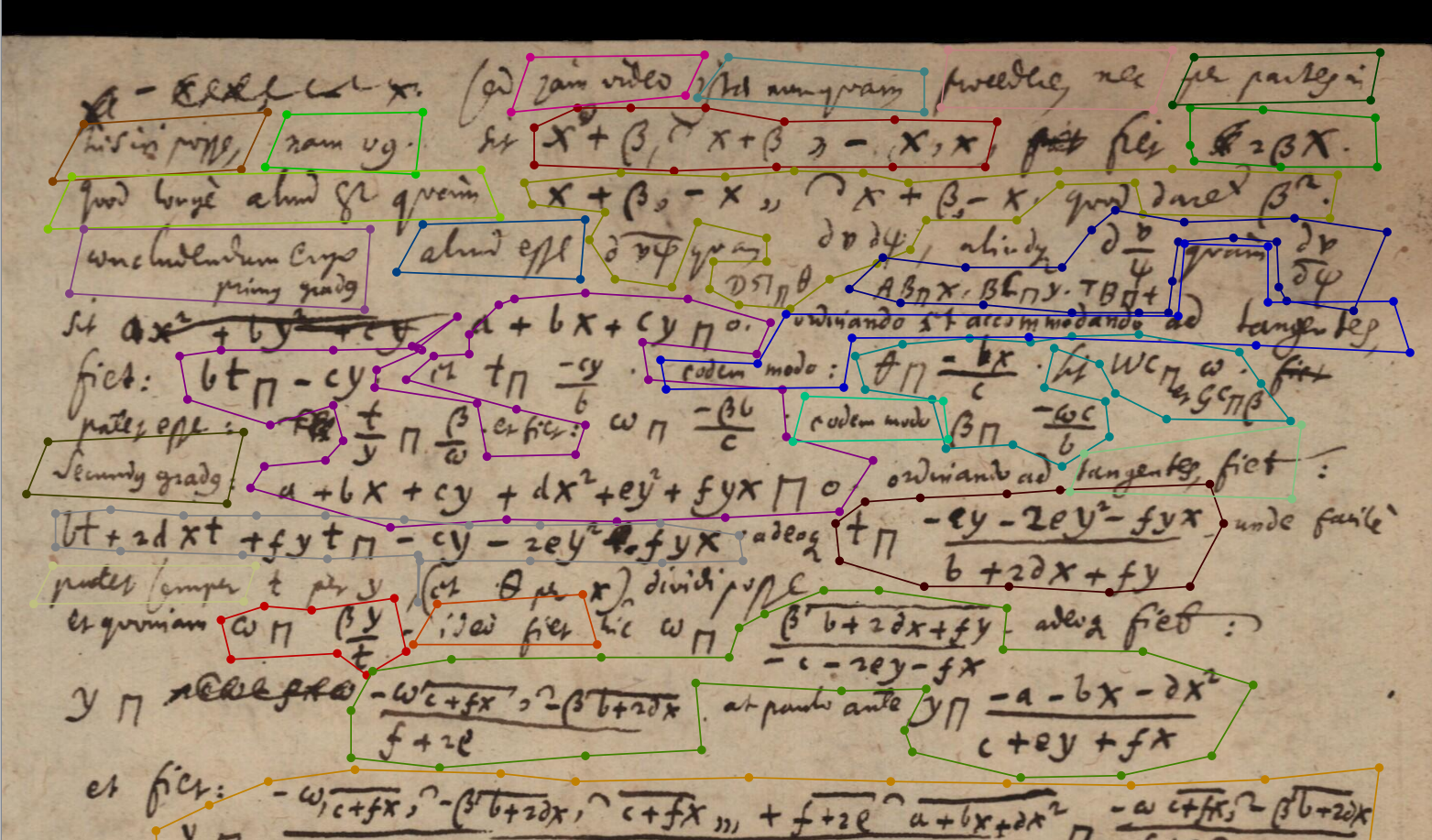

Done by Mamisoa Randrianarimanana and Xianxiang Zhang, supervised by Yejing Xie and Harold Mouchère, this research and development project focuses on the detection and segmentation of the mathematical expressions and equations that are in the manuscripts of Gottfried Wilhelm Leibniz.

Leibniz left us a wealth of manuscripts covering a large spectrum of knowledge concerning essentially mathematics and philosophy. Their exhaustive transcription would require decades of manual work by historians and transcription contributors. However, the notable advances in computing and deep learning offer an easier way to deal with this such a huge workload. In order to avoid partly manual transcription, automatic transcribing tools have already been put in place and are currently being used by research historians. They enable the text to be transcribed and the manuscripts to be edited digitally. For the moment however, they are unable to recognise and transcribe formulæ, equations and mathematical expressions. In fact, intertwining text and expressions are a big difficulty for artificial intelligence; none of them has been trained for this very specific task.

The need of doing a « total history » of the Leibniz's manuscripts is leading to the necessity of automatically transcribe and edit Leibniz's writings entirely, including formulæ, equations and mathematical expressions. First of all, the computer needs to detect and segment them with a specific layout analysis model. The sole fact of detecting and segmenting all the types of mathematical languages is a considerable forward step for automatical recognition; it will have a big influence on the final results.

Convolutional neural networks (CNNs) offer certain advantages over methods such as hidden Markov models (HMMs), transformers and probabilistic indexing methods for the task of detecting and segmenting text and mathematical expressions in images. Indeed, CNN is efficient at extracting features from local areas, which is very important for image segmentation tasks, as text and mathematical expressions are usually distributed at different locations in the image. CNN can automatically learn the features of different areas of the image, helping to accurately segment text and mathematical expressions. Secondly, CNN can be trained from start to finish, i.e. from the original input image to the final segmentation result, simplifying the whole model training and optimisation process.

In contrast, models such as HMMs and transformers often require additional steps or loss functions for end-to-end training. CNN also works well on large-scale datasets, whereas image segmentation tasks typically require large amounts of annotated data to train the model. The advantage of CNN is that it can learn more general features from large-scale data, thus improving model performance. In addition, the CNN can efficiently preserve spatial information from images, which is particularly important for image segmentation tasks. In addition, the CNN can efficiently retain spatial information from images, which is particularly important for image segmentation tasks. Text and equations often have specific spatial layouts and structures, and CNN can capture these features better, thus achieving more accurate segmentation.

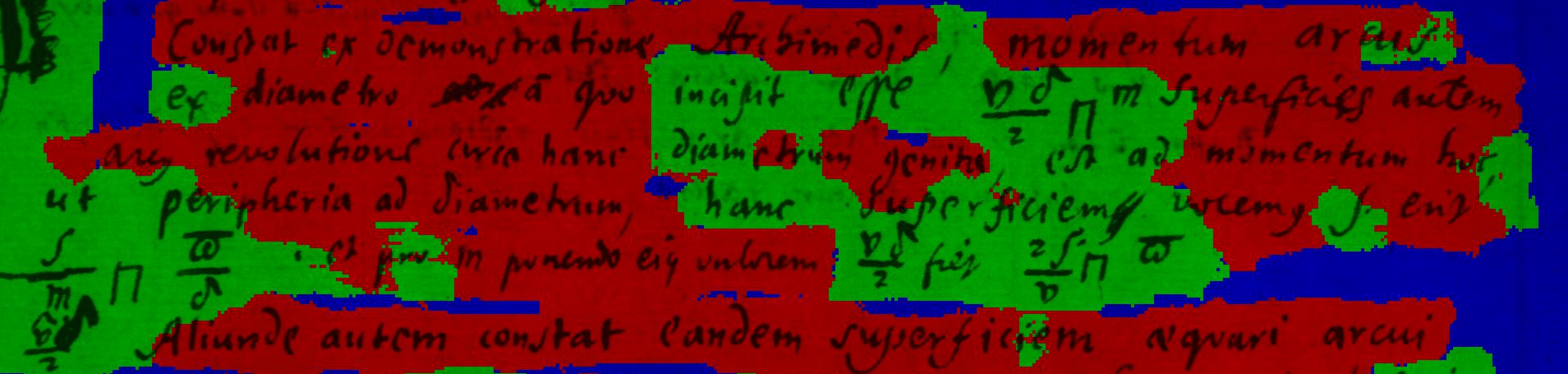

The main results of our experimentations are the following: on synthetic image with equations only, our model achieves 82% of correct predictions. With synthetic image with equations and text intertwined, but far apart from one another, the model fall to 70% of accuracy in the result. Finally, the model has only 61% of accuracy on a real image of Leibniz's draft, which is a good start but is far from a usable result. After the above exploration and evaluation, we found that our model had high accuracy for simple image segmentation processing. However, our model is not applicable in a very complex situations (e. g., real documents). The model still need to be loaded with new data for further improvements. In fact, one of the particularity of the CNN models is the greed of data it requires to work properly.

For further research, it would be interesting to extend the training to larger datasets. During our project, the limits of the training due to the small volume of data became very clear. Working with a larger dataset would make it possible to refine the model further. Also, to improve the analysis, it would be interesting to focus only on the pixels linked to the ink. This would minimise background noise and improve the clarity of the information extracted. By isolating only the pixels corresponding to the ink, the algorithms can concentrate on the interesting part of the manuscript content, thus improving detection and segmentation. Such an approach would improve the quality of layout analysis and could reveal subtle details that are often masked or altered by the heterogeneity of the drafts. By using only dark pixels, evaluation results will be more accurate.